This article discusses the main challenges of implementing artificial intelligence in government procurement and provides recommendations for addressing them. Government institutions can benefit from procurement innovations that take into account the specificities of artificial intelligence systems.

Keywords: artificial intelligence, government services, machine learning, government institutions

Government institutions around the world have tremendous potential to harness artificial intelligence (AI) to improve government operations and citizen services. However, governments may lack experience in procuring advanced AI solutions and may be cautious about adopting new technologies.

International organizations such as the World Economic Forum (WEF), together with companies such as Deloitte and Splunk, have developed a set of steps for using AI in government procurement, published in their guide "AI Procurement in a Box: AI Government Procurement Guidelines."

This article examines the key recommendations to countries for integrating AI into their government procurement systems.

What is artificial intelligence?

There is considerable uncertainty about what AI systems are, and even about what should count as an algorithm. For example, the "algorithm" that Stanford Medical School recently used to allocate COVID-19 vaccine doses was essentially a set of decision rules written by people rather than a complex system trained on available data. For discussing procurement, it is helpful to think of AI broadly as "Automated Decision Systems" (ADS): "any systems, software, or processes that use computations to support or replace governmental decisions, judgments, and/or policy implementation that affect capabilities, access, freedoms, rights, and/or safety." Such systems are increasingly being integrated into, or even replacing, governmental functions around the world, and this is precisely why they pose significant challenges to current procurement processes.

AI systems are typically optimized for specific goals, and how well those goals match the purposes for which the systems are deployed can vary widely. They can improve the work of government institutions and their interaction with citizens, for example through chatbots that facilitate communication and information retrieval. However, they also carry risks, as when a facial recognition system used by the police misidentifies a person as a criminal, leading to a wrongful arrest.

AI solutions that can be implemented quickly are often provided by private companies. As more and more government services are integrated into AI systems and other privately provided technologies, a growing network of private infrastructure emerges. When government agencies outsource critical technological infrastructure, such as data storage and cloud systems for data exchange and analysis, in the name of modernizing public services, they risk losing control over that infrastructure and reducing their accountability to the public that relies on it. Unlike private companies, which are accountable to their shareholders and driven by profit, government organizations are entrusted with the welfare of their entire population and are tasked with mitigating the harm that AI causes to the communities they serve.

Two problems stand out:

Government use of AI is distinct. An algorithmic decision-making system used by government entails different requirements than a product built for private use. Technology designed for public use is expected to serve the needs of all citizens, but this is not necessarily expected of products created in private settings; in fact, many products are tailored to a particular audience and only later extended to a broader user base. This disconnect is often overlooked.

Public use carries higher compliance standards. Technologies designed for public use are subject to different requirements and legal criteria than most privately used technologies.

Government procurement is one of the most heavily legislated and regulated areas of public administration. Procurement activities are typically set out in guidelines issued by government entities and organizations. Without a clear procurement structure, it is difficult to develop accountability mechanisms that apply across all organizations.

In the "AI and Procurement Primer" published by New York University, the authors identify six problems:

The field of AI as a whole is rife with undefined terms. The first set of definitional problems concerns technologies and procedures: there is no consistent concept of AI, or even of an algorithm. This can hinder, for example, the cataloging of existing sociotechnical systems in government and the development of procurement innovations specific to these technologies. Agencies and local authorities sometimes define these technologies for themselves, for example in registries, compliance reports, or new regulations, but there is little interagency coordination. Likewise, there are no agreed-upon definitions or procedures for assessing or auditing the impact and risks of AI.

The second set of definitional problems relates to legal frameworks and principles, particularly fairness. Understandings of what constitutes fairness in the context of AI differ widely. A working definition of fairness can only be truly fair if it is developed with the involvement of those who have suffered from unfair AI systems.

The third set of definitional problems concerns metrics, especially success metrics, both for the AI system itself and for the process by which it was procured. Appropriate success metrics may be missing, or they may contradict one another. For example, fraud detection models measure success by detecting anomalous behavior (e.g., behavior that is unusual for a particular person), which may indicate fraud but is not the same thing as fraud. Anyone whose credit card was frozen while on vacation would likely appreciate this distinction. Such systems are not used only by banks; government institutions may also employ them to detect benefit fraud. While the technical definition of success may be to detect as many fraud cases as possible, the practical definition of success may be to flag only the cases that are most likely to be fraud.
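The tension between these two definitions of success corresponds to the familiar trade-off between recall (the share of actual fraud cases that get flagged) and precision (the share of flagged cases that are actually fraud). The minimal Python sketch below illustrates it; the scores, labels, and the `precision_recall` helper are hypothetical and do not come from any real fraud detection system. Moving a single decision threshold is enough to trade one definition of success for the other.

```python
# Hypothetical anomaly scores from a benefit-fraud model, paired with the
# outcome later established by investigators (1 = fraud, 0 = legitimate).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [1, 1, 0, 1, 0, 0, 1, 0]


def precision_recall(scores, labels, threshold):
    """Flag every case scoring at or above `threshold` and return
    (precision, recall) for the flagged set."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y == 1)
    fp = sum(1 for f, y in zip(flagged, labels) if f and y == 0)
    fn = sum(1 for f, y in zip(flagged, labels) if not f and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# "Catch as much fraud as possible" (low threshold) versus
# "flag only the most likely cases" (high threshold).
for t in (0.25, 0.85):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.25: precision=0.57, recall=1.00
# threshold=0.85: precision=1.00, recall=0.50
```

With the low threshold every fraud case is caught, but three legitimate claimants are flagged as well; with the high threshold nobody is wrongly flagged, but half of the fraud goes undetected. Which outcome counts as "success" is a policy choice, not a technical one.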

The same methods could also be used to identify potentially suitable suppliers and to screen out those with a poor reputation and a high risk of abusing government procurement.
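The text does not specify how such screening would work. A minimal sketch, assuming each supplier has already been assigned relevance, reputation, and risk scores between 0 and 1, is a threshold filter followed by a ranking. The `Supplier` fields, the `shortlist` function, and the threshold values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Supplier:
    name: str
    relevance: float   # hypothetical: how well the offer matches the tender (0-1)
    reputation: float  # hypothetical: aggregated track record (0-1)
    risk: float        # hypothetical: estimated risk of procurement abuse (0-1)


def shortlist(suppliers, min_relevance=0.5, min_reputation=0.6, max_risk=0.3):
    """Keep suppliers that pass all three thresholds, most relevant first."""
    eligible = [
        s for s in suppliers
        if s.relevance >= min_relevance
        and s.reputation >= min_reputation
        and s.risk <= max_risk
    ]
    return sorted(eligible, key=lambda s: s.relevance, reverse=True)


candidates = [
    Supplier("Vendor A", relevance=0.90, reputation=0.8, risk=0.1),
    Supplier("Vendor B", relevance=0.95, reputation=0.4, risk=0.2),  # poor reputation
    Supplier("Vendor C", relevance=0.70, reputation=0.9, risk=0.5),  # high risk
    Supplier("Vendor D", relevance=0.60, reputation=0.7, risk=0.2),
]
print([s.name for s in shortlist(candidates)])  # ['Vendor A', 'Vendor D']
```

Note that the most relevant offer (Vendor B) is excluded by its reputation score; in practice the scores themselves would need the same scrutiny over definitions and metrics discussed above.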

The procurement process was designed to prevent abuse, but as a result it has accumulated layers of rigid procedures that typically only large suppliers can satisfy, so only they can compete for government contracts. The long-term contracts that result from these procedures remain embedded in government institutions for many years and can create path dependencies.

These dependencies can also extend to the emerging field of algorithmic auditing: large suppliers that already hold government contracts add algorithmic auditing to their portfolio of services, which incentivizes government institutions to procure these services from their existing suppliers. Tensions in the procurement process also arise from bottlenecks in procuring different services, such as delays or distortions at various stages (particularly the request for proposals, supplier selection, contract award, and execution). As the disproportionate impact of AI systems on individual citizens and communities becomes increasingly apparent, there is a pressing need to determine at which points in the procurement process risk and impact assessments should take place, including, importantly, the execution stage.

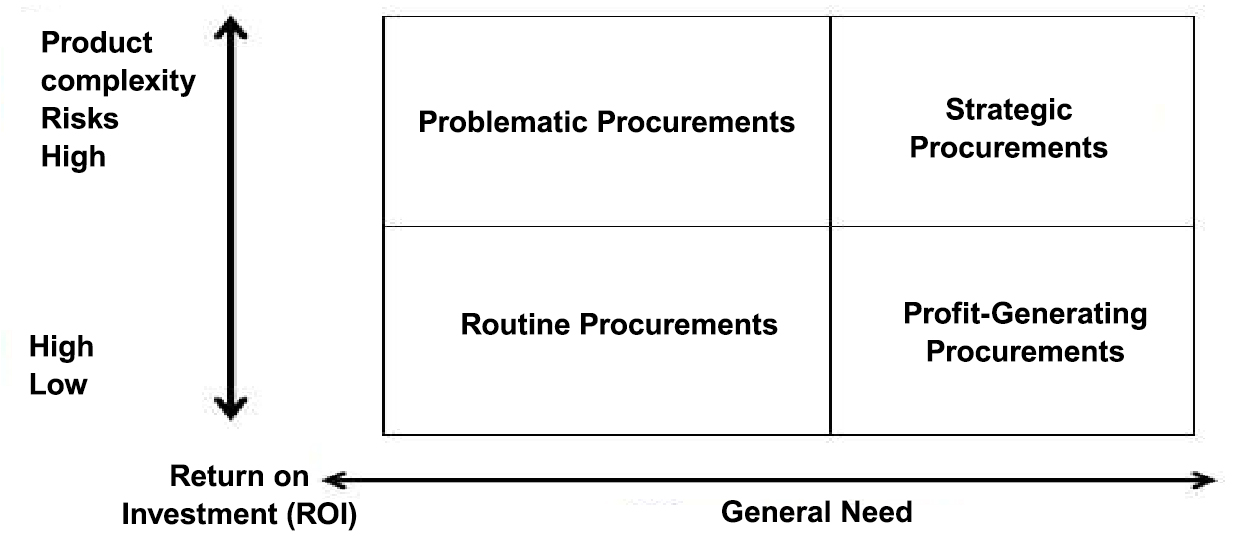

Fig. 1. Procurement Activity Matrix

As the Procurement Activity Matrix (Fig. 1) shows, different types of tasks require different levels of expertise and amounts of work. As the procurement process is restructured, it is necessary to identify the points at which public participation and informed discussion can be integrated.

The incentives underlying the procurement process as a whole, and those of the various organizations involved in it, can undermine the establishment of AI impact assessment structures. Suppliers are driven by commercial incentives and focus on generating profit by offering their services to government organizations. Most suppliers' primary responsibility is to their shareholders rather than to their clients or the people who use their services and systems. As a result, current procedural and cultural procurement settings are sustained by incentive structures that do not encourage measures to protect the population or mitigate algorithmic harm, not least because such measures may slow down the procurement process.

In this respect, the incentives of the government and the suppliers are effectively aligned: once a problem has been identified and a budget approved, both want to resolve it quickly by handing it off through a contract. Both parties are also interested in presenting a technological solution as the most effective approach to any given problem. Government officials who manage the procurement process may also lack any incentive to change it to account for the potential harms of AI technology. Their task is to procure as efficiently as possible, and there is no organizational or career reward for altering existing procedures.

The institutional structure of government can hinder procurement innovations that take into account the specificities of AI systems, especially with regard to problem-solving timelines and the implementation of such innovations. According to former U.S. Chief Data Scientist DJ Patil, government policy is typically developed over a span of 10 years. Politicians, by contrast, aim to exert influence during their much shorter terms in office, often with re-election in mind. Industry moves even faster. Policy and industry move at the same speed only when decision-makers must address problems within their jurisdiction, and those problems may not align with the government's organizational priorities and timelines.

Another issue is that "government" is often perceived as a monolithic entity, which excludes alternative forms of governance from the discussion and, consequently, from efforts to implement innovations. In addition, current procurement practices can erode infrastructural sovereignty.

Procurement serves as a gateway for adopting technological infrastructure and therefore has long-term implications for cities, communities, and the agencies themselves. Because agencies have limited capacity and/or resources to develop their own technologies, procurement means that large-scale technological infrastructure, including AI, is established through private suppliers. This dynamic hinders transparency rather than promoting it: private suppliers, often protected by trade secret law, are not obliged to open the "black box" and share information about their training data or models. Consequently, the promised outcome or narrative is often considered more important than the underlying technology. Such promised outcomes and narratives often become the meeting point between agencies and the private sector and take priority over establishing accountability in the procurement process, for example in the context of climate emergencies and "clean technologies," or of technologies deployed for public health management during the COVID-19 pandemic.

AI systems that can harm communities, for example through excessive surveillance and policing, become infrastructural once deployed and are therefore unlikely to be dismantled even when the harm is proven, as in the case of smart sensors installed in streetlights. Increasing transparency and oversight in the procurement process (rather than focusing on the promise of the technology) can prevent the deployment of AI infrastructure that may be harmful by design. Agencies need resources to improve their literacy and build their capacity regarding the consequences of acquiring AI systems, including through knowledge sharing among agencies.

The growing need to develop and ensure accountability structures for the procurement and deployment of AI systems by government institutions implies that these processes should be restructured so that agencies can understand the trade-offs and benefits of AI systems more quickly and confidently. Sufficient time should be allocated to identify and document the problem the AI system is meant to address and how it should address it. Affected communities must be heard. New AI legislation at national and international levels (e.g., the EU's new AI regulation) should be effectively adopted and translated into changes in how AI is developed, procured, and used. Working toward this can create space for developing a concept of collective accountability through AI procurement innovations, in which government institutions, as buyers, expand their authority by demanding accountability and transparency from suppliers, and agencies can revisit and iterate when issues arise. This new concept should serve as a reminder that political decisions are often encoded in how AI systems are defined and built, and that the procurement of these systems should therefore attend to such nuances and account for other political definitions.

Innovations in procurement are not possible without considering their legal implications, particularly those related to protecting purchasing organizations from liability. Government agencies are often held to higher standards for their services and outcomes than private companies, and liability is often seen purely as a risk rather than as an adaptive foundation for risk management. Furthermore, the impact of AI systems creates new complexities that challenge existing practices and accountability regimes. Currently, existing legal safeguards, such as human rights and anti-discrimination laws, offer little meaningful protection against the emerging discriminatory impacts of AI systems. Even when legal obligations concerning AI or other technologies apply to government institutions, private companies do not necessarily take them into account when developing and testing technological products for use in the public sector. Instead, companies often use settings with weak protections for citizens and the public sector as testing grounds without accountability, and technologies tested in these liability-free spaces are then deployed by local agencies. Moreover, the inherent uncertainty about the capabilities and functionality (ex ante) and the impact (ex post) of AI systems may require reassessing how obligations are distributed along AI supply chains. A government institution's direct contractor may itself rely on several different suppliers, which raises the question of who is responsible for the performance of the deployed AI system. Key measures for clarifying how obligations are distributed along this supply chain include clearer contracts with suppliers (e.g., liability allocation, warranties, clarity on trade secret protection, or incident insurance), thorough supplier vetting, post-deployment monitoring, and quality standards.

The primer also outlines a series of key actions for implementing AI in government procurement:

Improving communication about and understanding of AI systems, and of the risks and harms they can pose, is essential. Procurement staff, policymakers, citizens, and suppliers need a better understanding of how specific instances of AI harm and risk are connected to larger structural issues, and vice versa. Meaningful transparency requires standards for reporting the goals and assumptions embedded in an AI system and the risks and harms it may pose, together with recommendations for documenting and accounting for such reports.
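One way to make such reporting standards concrete is a structured disclosure record that a supplier fills in and an agency can check for completeness before award. The sketch below is purely illustrative: the `AISystemReport` fields and the `missing_sections` check are hypothetical and are not taken from the primer or from any existing standard.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AISystemReport:
    """Hypothetical disclosure record for a procured AI system."""
    system_name: str
    supplier: str
    goals: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    known_risks: list[str] = field(default_factory=list)
    potential_harms: list[str] = field(default_factory=list)
    affected_groups: list[str] = field(default_factory=list)


def missing_sections(report):
    """Return the names of list-valued sections that are still empty."""
    return [
        f.name for f in fields(report)
        if isinstance(getattr(report, f.name), list) and not getattr(report, f.name)
    ]


report = AISystemReport(
    system_name="Benefit claim triage",  # hypothetical example system
    supplier="Vendor A",
    goals=["Prioritize claims for manual review"],
    assumptions=["Historical claim outcomes are representative"],
)
print(missing_sections(report))  # ['known_risks', 'potential_harms', 'affected_groups']
```

A completeness check like this only verifies that something was written in each section; judging whether the stated risks and harms are adequate still requires the kind of expertise and community input described in this article.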

Agencies need to communicate with one another and to exchange and build capacity for procuring AI systems. Within each agency, responsibilities for procuring AI systems, and for the impact these systems can have on citizens and on the agencies themselves, must also be clearly defined. Resources should be developed and shared to support individuals and communities within agencies who are working to improve procurement processes and mitigate AI harms. Similarly, support should be provided to communities outside agencies that face AI-related harms and issues. These resources and capacity-building opportunities should be connected through a network of procurement staff, AI researchers, advocacy representatives, and others.

The field of public interest technology is expanding significantly. Government institutions increasingly use technologies, including AI systems, in every aspect of their work. This creates a growing need for public interest technologists: professionals trained in both the technical and the social sciences who can properly assess the social implications of constantly emerging technologies. Fortunately, the next generation of technologists increasingly wants to do meaningful work that considers the social impact of technology. It is therefore crucial that this talent be cultivated early and equitably.

At the same time, both government institutions and the private sector need to focus on creating a range of jobs in public interest technology, hiring and retaining diverse talent early in their careers, and supporting public interest technology teams both within and beyond their organizations.
